In today’s fast-paced digital landscape, where innovation is the lifeblood of enterprise success, a lurking threat emerges from the shadows, threatening to compromise trust and intellectual property (IP). This ominous force, known as the Shadow AI Crisis, quietly infiltrates organizations, operating without oversight and beyond the boundaries of established protocols.

As companies rapidly integrate artificial intelligence into their operations, the risk of unregulated and unauthorized AI tools becomes an ever-pressing concern. With these shadowy systems at play, businesses must confront the dual challenge of preserving proprietary information while fostering a culture of transparency and accountability.

Introduction: The Elephant in the Server Room

It starts with a simple intention: efficiency. A developer wants to debug a script faster. A marketing manager needs to summarize a 50-page PDF before a meeting. But when IT says “no,” they don’t stop; they go underground.

Over 68% of employees now admit to using AI tools secretly at work, often driven by the fear of falling behind or being replaced if they aren’t hyper-productive.

The Core Problem

Most enterprises I work with are currently living in a state of delusion. They believe that because they blocked ChatGPT on the corporate firewall, they are safe. The reality is that innovation has simply moved to personal smartphones and mobile networks, leaving the organization with zero visibility and maximum risk.

Shadow AI is no longer just a compliance nuisance; it is a full-blown trust crisis that pits security teams against the very workforce they are trying to protect.

In today’s enterprise environment, the Shadow AI Crisis is no longer a hidden problem; it’s an operational reality. As organizations struggle with visibility, shadow AI discovery has become critical to understanding how employees are quietly using generative tools outside approved systems. What often begins as a productivity shortcut can quickly escalate into a high-risk scenario that exposes sensitive data, intellectual property, and organizational trust.

What is Shadow AI? Shadow AI refers to the unsanctioned use of generative AI tools (like public LLMs, unauthorized chatbots, or browser extensions) by employees, outside of approved IT or security controls. Unlike traditional Shadow IT, Shadow AI poses unique risks regarding training data absorption, IP leakage, and output hallucination.

Thesis Statement

You cannot police your way out of this crisis. If you treat your employees like adversaries, they will hide their best work from you. Trust, enablement, and transparent governance, not draconian bans, are the only sustainable path to AI security.

Why “Protective” AI Controls Often Backfire

The Vicious Cycle of Distrust

In my experience consulting with Fortune 500s, I’ve seen the same pattern play out repeatedly. It’s a vicious cycle that actually increases security risks:

- The Ban: IT blocks all public AI domains (OpenAI, Anthropic, etc.).

- The Workaround: Employees, desperate to maintain productivity, switch to personal laptops or mobile devices.

- The Blind Spot: Sensitive data now flows over personal mobile networks, completely bypassing the corporate DLP (Data Loss Prevention) stack.

- The Crackdown: Management installs stricter surveillance (“tattle-ware”) to detect AI-generated text.

- The Collapse: Morale plummets. Employees stop sharing their innovative use cases for fear of punishment.

The Misuse of “Zero Trust”

There is a fundamental misunderstanding of “Zero Trust” in the AI era. Zero Trust is a network architecture model, not a people management strategy.

When you apply a “Zero Trust” mindset to human culture, treating every employee as a potential leaker who must be watched, you guarantee that innovation will happen in the shadows. Security works best when employees feel they are partners in protection, not suspects.

The Cost of Distrust

Sanctioned Tool Abandonment: If your approved internal tools are clunky compared to public ChatGPT, and you punish people for complaining, you waste millions on shelf-ware.

Innovation Stagnation: The teams finding the most creative ways to use AI are often the ones most afraid to tell you about it.

The Real Enterprise Risks: IP, Data, and Legal Exposure

You might ask, “If it helps them work faster, why do we care?” The answer lies in how Generative AI processes information.

How Shadow AI Leaks Intellectual Property

Shadow AI leaks intellectual property because public, consumer-grade AI models often retain user inputs to train future versions of the model. When an employee pastes proprietary code or strategy documents into these tools, that data leaves the enterprise’s legal control and can potentially be resurfaced in response to a competitor’s prompt.

Primary Risk Vectors

1. Training Data Absorption

This is the nightmare scenario. If your lead engineer pastes a block of proprietary source code into a free, public LLM to “optimize” it, that code may become part of the model’s training corpus. Six months later, a competitor asking for “code to solve X problem” might receive a snippet of your proprietary algorithm.

2. Prompt Leakage

Even if the model doesn’t train on the data, the infrastructure might log it. Shadow AI often involves “middle-man” browser extensions (e.g., “Free PDF Chat”) that route data through insecure third-party servers before it even reaches the LLM provider.

3. Output Contamination & Legal Ambiguity

Who owns the code generated on a personal ChatGPT Plus account paid for by the employee? In the United States, the Copyright Office and the courts have held that content generated entirely by AI, without human authorship, cannot be copyrighted. By allowing Shadow AI, you are slowly filling your codebase and content library with assets you may not legally own, and once that code reaches your production software, your copyright claim over it is uncertain.

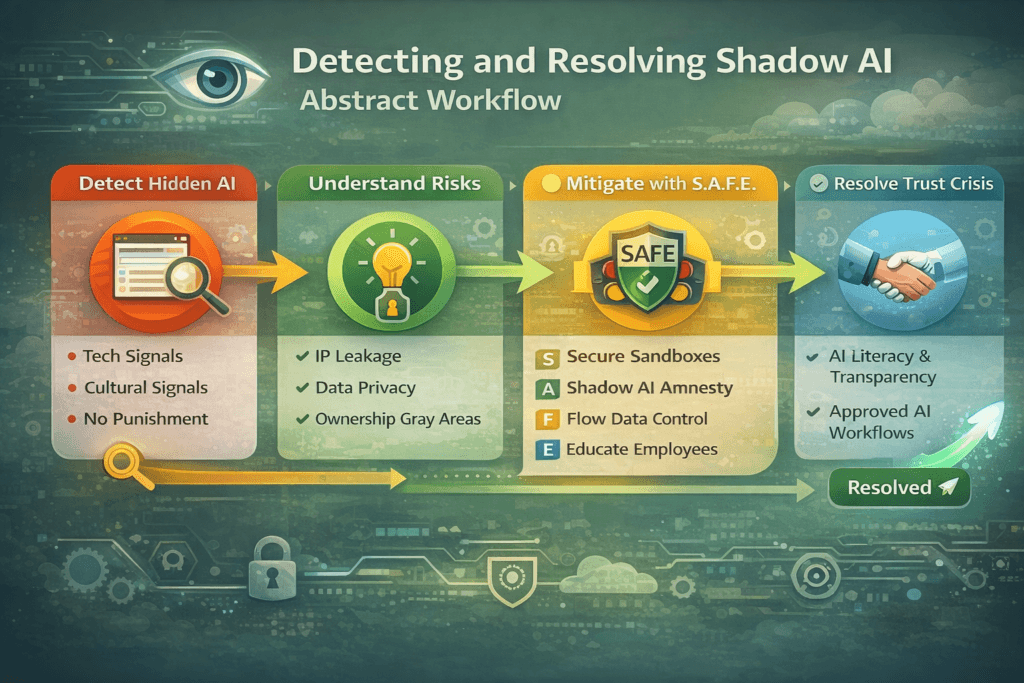

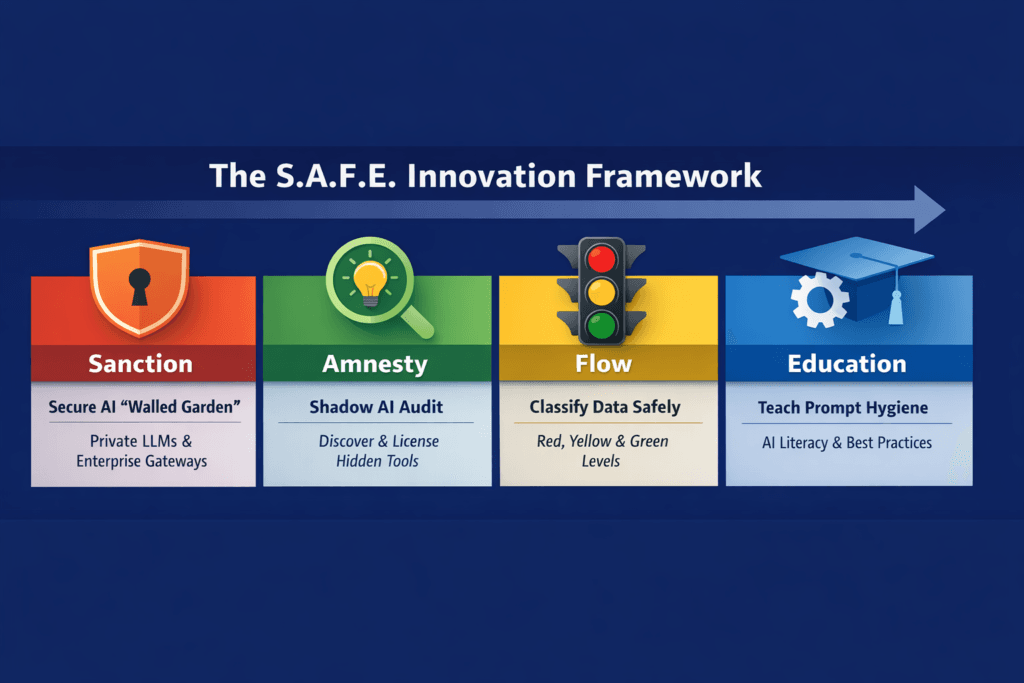

The S.A.F.E. Innovation Framework

To fix this, we need to move from a posture of Prevention to a posture of Enablement. I developed the S.A.F.E. Framework to help organizations rebuild trust while securing their IP.

1. Sanction – Build a Secure AI “Walled Garden”

You cannot beat something with nothing. If you ban ChatGPT, you must provide a better, safer alternative.

- Action: Deploy an Enterprise AI Gateway. This acts as a proxy. Employees log in with corporate SSO, and the gateway strips PII (Personally Identifiable Information) before sending the prompt to a private instance of GPT-4 or Claude.

- The Promise: “Use our internal tool. It’s the same smarts as ChatGPT, but your data is never trained on.”
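The scrubbing step of such a gateway can be sketched in a few lines. This is a minimal illustration, not a production design: the `PII_PATTERNS` table and the `scrub_prompt` function are hypothetical names, and the three regular expressions (email, US Social Security number, US-style phone number) are assumptions that stand in for a dedicated PII detection service.

```python
import re

# Hypothetical patterns for illustration only; real gateways typically
# rely on a dedicated PII detection service rather than three regexes.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def scrub_prompt(prompt: str) -> tuple[str, dict[str, int]]:
    """Replace each PII match with a typed placeholder and count redactions."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        prompt, replaced = pattern.subn(f"[{label}]", prompt)
        if replaced:
            counts[label] = replaced
    return prompt, counts


if __name__ == "__main__":
    clean, counts = scrub_prompt(
        "Email jane.doe@example.com or call 555-123-4567 about SSN 123-45-6789."
    )
    print(clean)
    print(counts)
```

Returning the redaction counts alongside the cleaned prompt lets the gateway log that something was removed without logging the sensitive values themselves.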

2. Amnesty – Bring Shadow Usage into the Light

This is the hardest but most crucial step.

- The “Shadow AI Audit”: Announce a 2-week Amnesty period. Ask employees to disclose what tools they are using and why.

- The Guarantee: “No one will be fired or reprimanded for past usage disclosed during this period. We want to know what tools you need so we can buy enterprise licenses for them.”

3. Flow – Classify Data, Not People

Stop trying to classify every user and start classifying the data flows. Use a simple Traffic Light System:

| Signal | Data Type | AI Permission |
| --- | --- | --- |
| 🔴 Red | PII, Trade Secrets, Unreleased Financials | Strictly Prohibited or Local-Only Models (e.g., Llama 3 on-prem) |
| 🟡 Yellow | Internal Memos, Draft Code, Process Docs | Enterprise Sandbox Only (Data is discarded after session) |
| 🟢 Green | Public Marketing Copy, Published Blogs, General Knowledge | Public AI Allowed (Standard tools okay) |
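The Traffic Light System can be expressed as a small policy lookup that a gateway or DLP rule could call. The sketch below is illustrative: the data-type labels, the `Destination` names, and the `is_allowed` function are hypothetical. It also makes two assumptions the table does not state: a stricter destination is always acceptable for less sensitive data, and any unrecognized data type is treated as Red.

```python
from enum import Enum


class Signal(Enum):
    RED = "red"
    YELLOW = "yellow"
    GREEN = "green"


class Destination(Enum):
    LOCAL_MODEL = "local_model"
    ENTERPRISE_SANDBOX = "enterprise_sandbox"
    PUBLIC_AI = "public_ai"


# Hypothetical labels mapped to the signals from the table.
DATA_TYPE_SIGNAL = {
    "pii": Signal.RED,
    "trade_secret": Signal.RED,
    "unreleased_financials": Signal.RED,
    "internal_memo": Signal.YELLOW,
    "draft_code": Signal.YELLOW,
    "process_doc": Signal.YELLOW,
    "public_marketing_copy": Signal.GREEN,
    "published_blog": Signal.GREEN,
    "general_knowledge": Signal.GREEN,
}

# Assumption: stricter destinations stay available to less sensitive data.
ALLOWED = {
    Signal.RED: {Destination.LOCAL_MODEL},
    Signal.YELLOW: {Destination.LOCAL_MODEL, Destination.ENTERPRISE_SANDBOX},
    Signal.GREEN: set(Destination),
}


def is_allowed(data_type: str, destination: Destination) -> bool:
    """Check a data type against the policy; unknown types default to Red."""
    signal = DATA_TYPE_SIGNAL.get(data_type, Signal.RED)
    return destination in ALLOWED[signal]


if __name__ == "__main__":
    print(is_allowed("draft_code", Destination.PUBLIC_AI))
    print(is_allowed("published_blog", Destination.PUBLIC_AI))
```

Defaulting unknown data types to Red keeps the policy fail-closed: a new document category has to be classified before it can leave the local environment.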

4. Education – Teach Prompt Hygiene

Compliance training is usually boring. Replace it with “AI Literacy.”

- Hallucination Awareness: Ensure employees know that AI is a reasoning engine, not a knowledge database, and that it can state false information with complete confidence. Every output needs checking.

- Data Anonymization: Instead of pasting “Client X’s Q3 Revenue is $5M,” teach them to paste “Company A’s Revenue is $Y.”
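The anonymization habit can also be automated so that employees do not have to do it by hand. The sketch below is one possible approach under stated assumptions: `anonymize` and `restore` are hypothetical helpers, the caller supplies the list of client names to mask, and dollar amounts are detected with a simple regular expression that will not cover every format.

```python
import re
from string import ascii_uppercase

# Matches amounts such as $5M, $1,200, or $3.5B (an illustrative pattern).
AMOUNT = re.compile(r"\$\d+(?:,\d{3})*(?:\.\d+)?[KMB]?")


def anonymize(text: str, client_names: list[str]) -> tuple[str, dict[str, str]]:
    """Swap known client names and dollar amounts for neutral placeholders."""
    mapping: dict[str, str] = {}
    for index, name in enumerate(client_names):  # supports up to 26 names
        alias = f"Company {ascii_uppercase[index]}"
        mapping[alias] = name
        text = text.replace(name, alias)

    amount_count = 0

    def replace_amount(match: re.Match) -> str:
        nonlocal amount_count
        amount_count += 1
        alias = f"$AMOUNT_{amount_count}"
        mapping[alias] = match.group(0)
        return alias

    return AMOUNT.sub(replace_amount, text), mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Put the original values back into a model response."""
    # Longest aliases first, so $AMOUNT_10 is not clobbered by $AMOUNT_1.
    for alias in sorted(mapping, key=len, reverse=True):
        text = text.replace(alias, mapping[alias])
    return text


if __name__ == "__main__":
    masked, mapping = anonymize("Client X's Q3 Revenue is $5M", ["Client X"])
    print(masked)
    print(restore(masked, mapping))
```

The mapping stays on the employee's side of the boundary, so the model only ever sees the placeholders, and the response can be re-identified locally.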

A Practical, Compliant Policy Workflow

Let’s look at a real-world scenario to see how this shifts the workflow.

Scenario: A Senior Product Marketing Manager needs to summarize a confidential 80-page product roadmap PDF to create a launch strategy.

The Wrong Way (Shadow AI)

- Action: The manager finds a free website called “ChatWithPDF.io” (fictional example).

- Risk: They upload the confidential PDF. The site’s Terms of Service state they own all uploaded data.

- Outcome: The roadmap is now on a third-party server. The manager gets the summary, but the IP is compromised.

The Trusted Way (S.A.F.E. Protocol)

- Action: The manager logs into the company’s “Internal AI Portal.”

- Process: They upload the PDF. The portal’s PII Scrubber automatically redacts specific project codenames and client names.

- Security: The prompt is sent to a private Azure OpenAI instance via API (governed by a “Zero Data Retention” agreement).

- Outcome: The summary is generated. The PDF is deleted from memory immediately after the session. The manager gets their work done safely.

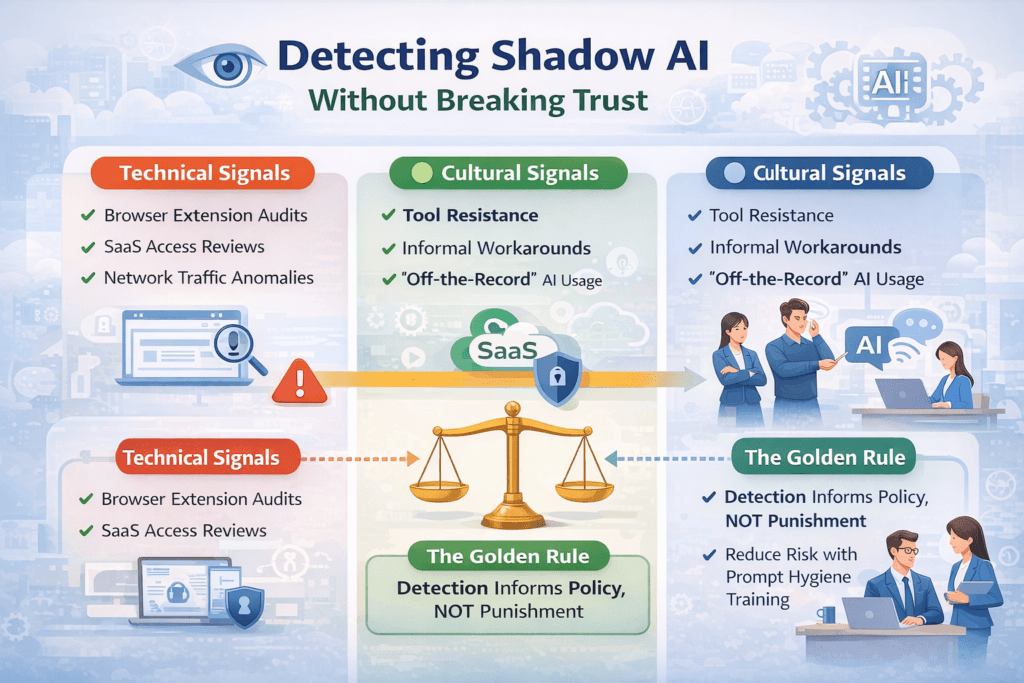

Detecting Shadow AI Without Breaking Trust (Shadow AI Discovery)

You still need to detect risks, but the goal of detection changes. You are looking for gaps in your tooling, not villains in your workforce.

Technical Signals

- CASB/SWG Logs: Look for high-volume traffic to domains like chatgpt.com, claude.ai, or obscure “AI writing” extensions.

- Browser Extensions: Audit the extensions installed on corporate browsers. Many “productivity” plugins are essentially keyloggers that send data to AI backends.

Cultural Signals

- “Magic” Turnarounds: If a junior developer suddenly starts submitting advanced code at 3x their normal speed, they are likely using AI.

- The Silence: If no one is asking IT for AI tools, it doesn’t mean they aren’t using them. It means they are hiding them.

The Golden Rule of Detection

Detection should inform procurement, not punishment. If you see 50 employees using a specific unauthorized tool (e.g., Midjourney), that is a signal to buy an Enterprise License for that tool, not to block it.
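One way to operationalize this rule is to turn detection events into a procurement shortlist. The sketch below assumes detection yields `(user, tool)` pairs; the `procurement_candidates` function is hypothetical, and the default threshold of 50 users simply mirrors the example above rather than any recommended value.

```python
from collections import defaultdict
from collections.abc import Iterable


def procurement_candidates(
    usage_events: Iterable[tuple[str, str]], min_users: int = 50
) -> list[tuple[str, int]]:
    """Return (tool, distinct user count) pairs at or above the threshold."""
    users_by_tool: dict[str, set[str]] = defaultdict(set)
    for user, tool in usage_events:
        users_by_tool[tool].add(user)
    candidates = [
        (tool, len(users))
        for tool, users in users_by_tool.items()
        if len(users) >= min_users
    ]
    # Most widely used tools first; ties broken alphabetically.
    return sorted(candidates, key=lambda item: (-item[1], item[0]))
```

The function deliberately discards user identities before returning, so the report that reaches procurement answers “what should we license?” and nothing else.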

Rebuilding Organizational Trust and Culture

Leadership needs to kill the “Replacement Anxiety.”

- Old Narrative: “AI will cut costs and automate jobs.” (Breeds fear and secrecy).

- New Narrative: “AI is your exoskeleton. It amplifies your skills. We want you to be the pilot.”

Leadership Visibility

I encourage CTOs and CEOs to use sanctioned AI tools publicly. Share your own prompts. Show the team that using AI correctly is a path to promotion, not a shortcut that suggests laziness.

Celebrate Safe Innovation

Create an internal “AI Wins” newsletter. Highlight a team that used the Enterprise Sandbox to save 20 hours of work. This validates the safe channel and encourages others to leave the shadows.

Preparing for the Future: From Shadow AI to Trusted AI

The organizations that win in 2025 won’t be the ones with the strictest firewalls. They will be the ones with the highest AI Velocity.

Shadow AI is a symptom of unmet needs. It signals that your workforce is hungry to innovate but lacks the tools. By converting Shadow AI into Sanctioned AI, you turn a security risk into a competitive advantage. You get the speed of AI with the security of enterprise governance.

Conclusion: Trust Is the Ultimate Security Layer

Technology alone cannot solve the Shadow AI crisis. You can block 1,000 domains, and a new one will pop up tomorrow. The only scalable security layer is Trust. When employees trust that IT is an enabler rather than a blocker, they bring their workflows into the light. They ask for permission. They use the secure sandboxes.

Don’t ban the future. Secure it.

Visualization of the AI Trust Gap

| Report Source | Primary Stat | Core Warning |
| --- | --- | --- |
| Menlo Security | 68% Shadow AI Usage | 80% of AI access happens via browsers. |
| Cyberhaven | 485% Data Increase | 83% of data flows to high-risk platforms. |
| LayerX | 1.5M Malicious Installs | Browser extensions are the primary leak vector. |
| Palo Alto Networks | 890% Traffic Surge | Organizations use an average of 66 AI apps. |

FAQs

What is Shadow AI in the enterprise?

Shadow AI is the use of unauthorized artificial intelligence tools, such as chatbots, coding assistants, or writing assistants, by employees without the explicit approval or oversight of the IT department.

Why is Shadow AI dangerous?

It creates significant risks including Data Leakage (sensitive info training public models), IP Loss (uploading proprietary code), Compliance Violations (GDPR/HIPAA breaches), and Security Vulnerabilities (malicious browser extensions).

How can companies detect Shadow AI usage?

Companies can detect Shadow AI by auditing web traffic logs (SWG/CASB) for AI domains, scanning for unauthorized browser extensions, and monitoring for anomalous spikes in data uploads or productivity speeds.

Can private or enterprise AI models leak data?

Generally no, if configured correctly. Enterprise agreements (like Azure OpenAI or AWS Bedrock) typically ensure that your data is not used to train the base model, and they offer limited or zero data retention. Retention terms vary by provider and contract, so verify them before onboarding users. This is why moving users to these paid tiers is critical.

Should organizations ban public AI tools entirely?

A complete ban usually fails and drives usage underground (Shadow AI). A better approach is to Sanction specific enterprise-grade tools while blocking risky, unknown consumer apps, creating a “Safe Harbor” for innovation.