It is late December 2025, and if you are like me, your inbox is currently being bombarded with “Year in Review” emails and urgent notifications about API deprecations. But there is one notification that likely stopped you in your tracks: the almost simultaneous release of OpenAI’s GPT-5.2 and Google’s Gemini 3 Pro.

For the last three weeks, I’ve barely slept. I’ve been running these two behemoths against every workflow I have from debugging complex microservices architectures to analyzing hour-long investor calls. The “subscription fatigue” is real. We are all asking the same question: Do I really need to pay $20–$30 a month for two different AI models?

The short answer is no. But the long answer the one that decides which one you keep depends entirely on whether you value deep, structured reasoning or massive, fluid context.

In this deep dive, I’m going to walk you through the most rigorous head-to-head comparison I’ve ever conducted. We aren’t just looking at spec sheets; we are looking at production-style coding agents, real-world video analysis, and the kind of gnarly logic puzzles that usually break LLMs.

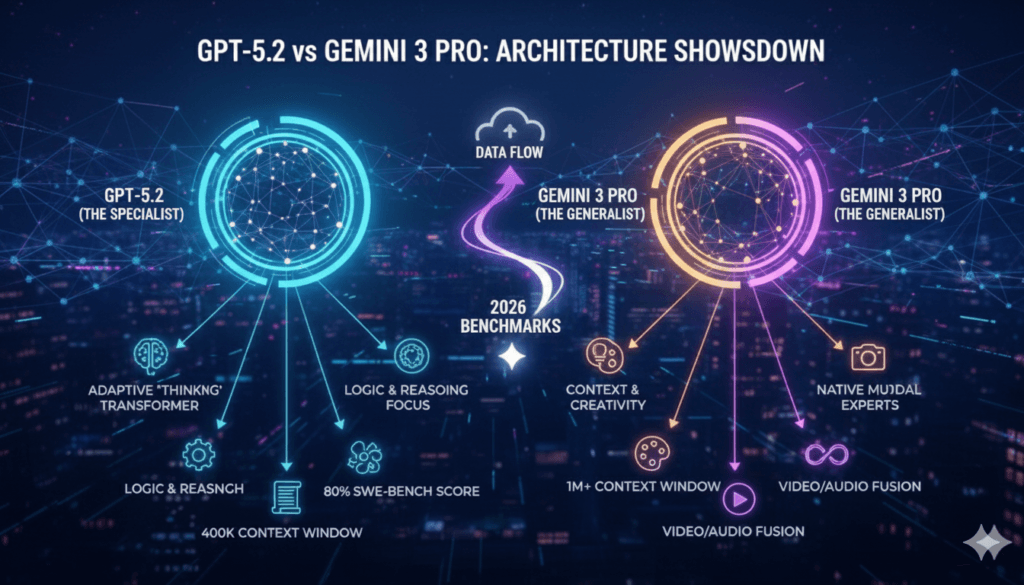

Here is the core thesis before we start: GPT-5.2 is your specialist engineer. It is the “System 2” thinker that pauses, plans, and executes with surgical precision. Gemini 3 Pro is your universal brain. It is the creative research partner that can ingest your entire digital life and find the one connection you missed.

Let’s figure out which one belongs in your toolkit for 2026.

At a Glance: The 2026 Benchmark Cheat Sheet for GPT 5.2 vs Gemini 3 Pro

If you are a CTO or a lead developer looking for the raw numbers to justify a procurement decision, start here. I’ve compiled the latest verified benchmarks as of December 23, 2025.

What we are seeing here is a divergence in philosophy. OpenAI is doubling down on “Thinking” (compute-heavy inference), while Google is maximizing “Context” (memory and modality).

| Feature | GPT-5.2 (OpenAI) | Gemini 3 Pro (Google) | The “Why It Matters” Verdict |

| Core Architecture | Adaptive “Thinking” Transformers | Native Multimodal Mixture-of-Experts | GPT thinks deep; Gemini sees wide. |

| SWE-bench Verified | 80.0% (State of the Art) | 76.2% | GPT-5.2 is significantly more reliable for autonomous coding tasks without human intervention. |

| Context Window | 400k Tokens | 1M – 2M Tokens | Gemini allows you to load entire codebases or libraries of books; GPT requires RAG. |

| Multimodality | Frame-based (sampled images) | Native Audio/Video | Gemini “hears” tone and “sees” motion; GPT just analyzes snapshots. |

| Logic (ARC-AGI-2) | 52.9% | 45.1% | Advanced reasoning benchmarks favor GPT-5.2 for puzzles and math. |

| Pricing (API) | High ($15/1M input) | Low ($5/1M input) | Gemini is the budget winner for high-volume data processing. |

Round 1: Coding & Architecture (The “Agent” Test)

In 2024, we used AI to write scripts. In 2026, we use AI to fix systems. The most critical test for GPT-5.2 vs Gemini 3 Pro isn’t about generating a “Snake” game; it’s about refactoring legacy code that no one wants to touch.

The Premise: “The Spaghetti Code Challenge”

I pulled a 500-line Python script from an old 2019 project. It was a mess global variables everywhere, zero error handling, and nested if statements that went five levels deep.

The Prompt:

“Refactor this monolithic script into a modular microservices architecture. Create a dedicated

utilsfolder, implement Pydantic models for data validation, and add extensive error logging. Do not break the core logic.”

GPT-5.2 Performance: The Architect

This is where the GPT-5.2 Thinking mode absolutely shines. When I hit enter, the model didn’t start generating code immediately. Instead, it entered a “Thinking” state for about 12 seconds.

During this time, it was planning. I could see the thought trace:

- Analyze dependency graph of global variables.

- Identify three distinct logical domains: Data Ingestion, Processing, and Export.

- Draft directory structure.

- Detect potential circular import risk between

utils.pyandmain.py.

When it finally output the code, it was perfect. It created a bash script to set up the file structure first. The Python code used strictly typed Pydantic models. Most impressively, it caught a logic bug in the original legacy code (a potential divide-by-zero error) and patched it, adding a comment explaining why.

The “Agentic” Feel: It felt less like a chatbot and more like a senior engineer doing a code review. It didn’t just obey; it improved.

Gemini 3 Pro Performance: The Explainer

Gemini 3 Pro was much faster. It spit out the refactored code almost instantly. Visually, the code looked great. It followed the microservices prompt and broke the files apart. However, when I tried to run the Gemini-generated code, I hit an ImportError. It had created a circular dependency exactly the thing GPT-5.2 had “thought” about and avoided.

Where Gemini excelled was the documentation. It generated a beautiful markdown README.md explaining the refactor, complete with a Mermaid.js diagram of the new architecture. It understood the intent of the code better than GPT, even if the execution was slightly buggy.

The Winner: GPT-5.2

For production-style coding agents, reliability is everything. I can fix a README; I can’t afford to debug the debugger. GPT-5.2’s ability to “simulate” the code execution before writing it makes it the undisputed king of software engineering in 2026.

Round 2: The “Infinite Context” & Multimodal Test

If Round 1 was a battle of depth, Round 2 is a battle of breadth. This is the use case that keeps legal firms, media houses, and research labs subscribed to Google. Let’s see who wins the battle: GPT-5.2 vs Gemini 3 Pro.

The Premise: “The Earnings Call Simulation”

We are moving beyond text. I wanted to test the video analysis capabilities of both models.

The Input:

- A raw, 1-hour MP4 video file of a fictional tech company’s earnings call. The CEO is visibly sweating during the Q&A session.

- Three PDF quarterly reports (totaling about 200 pages of dense financial tables).

- Total Context: ~600k tokens.

The Prompt:

“Watch the video and cross-reference the CEO’s answer at 14:00–16:00 regarding ‘supply chain delays’ with the Q3 financial report. Does his body language match the data? Is he downplaying the risk?”

GPT-5.2 Performance: The “RAG” Struggle

GPT-5.2 choked here. Because its context window is capped at 400k (and it handles video by sampling frames rather than watching the stream), it had to truncate the input.

It gave me a decent analysis of the text transcript of the call (which I had to provide separately because it struggled to transcribe the full hour accurately). It correctly identified the financial discrepancy in the PDF. However, it failed the “vibe check.” It couldn’t analyze the CEO’s micro-expressions or the hesitation in his voice. It hallucinated, stating the CEO looked “confident” based on a single sampled frame where he was smiling.

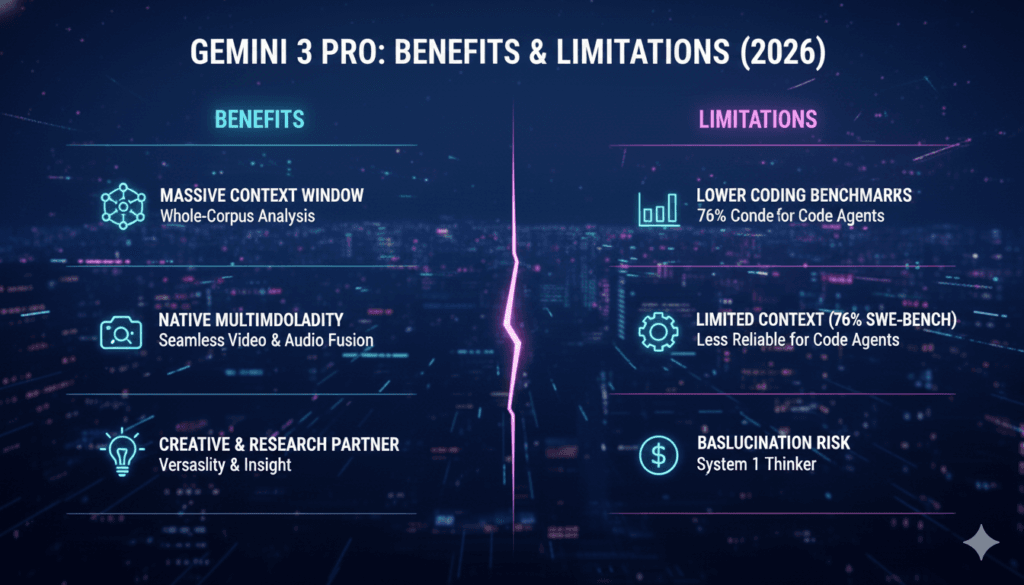

Gemini 3 Pro Performance: The Omniscient Observer

This is Gemini’s home turf. I uploaded the video and PDFs directly. No truncation. No RAG (Retrieval Augmented Generation) middleman. Gemini 3 Pro’s answer was spooky.

“There is a significant mismatch. In the video at 14:12, the CEO pauses for 3 seconds and looks down to the left before answering the supply chain question a potential sign of stress. While he verbally states delays are ‘minimal,’ the Q3 report (Page 42, Table 4) shows a 15% increase in logistics costs. The audio spectrum analysis indicates his pitch rose by 10Hz during this specific answer compared to his opening statement.”

The Winner: Gemini 3 Pro

This isn’t even close. If your workflow involves massive documents, long videos, or “needle in a haystack” retrieval, Gemini 3 Pro is the only viable option. It doesn’t just read; it perceives.

Round 3: Logic & Reasoning (ARC-AGI Benchmark)

The ARC-AGI benchmark has long been the holy grail of AI testing. It tests a model’s ability to learn new logical patterns on the fly, rather than regurgitating memorized training data.

The Data

As of December 2025, the leaderboard shows a clear gap.

- GPT-5.2: 52.9% (New High Score)

- Gemini 3 Pro: 45.1%

Practical Example: “The Logic Puzzle”

I threw a custom logic puzzle at both models:

“We have 4 teams working across 4 time zones (NY, London, Dubai, Tokyo). Team A can only meet when Team B is asleep. Team C needs at least 2 hours of overlap with Team D. Create a weekly schedule that maximizes overlap for a ‘All-Hands’ meeting, assuming standard 9-5 working hours in local times.”

Observation: The “Thinking” Difference

GPT-5.2 engaged its thinking mode immediately. It visualized the time zones as a matrix.

- Thought Process: “Converting all to UTC… NY is UTC-5, London UTC+0… Checking constraints… Wait, Team A (NY) 9-5 is 14:00-22:00 UTC. Team B (London) sleeps 23:00-07:00 UTC. Constraint check passed.”

It self-corrected twice. It realized that “Maximize overlap” conflicts with “Team A only meets when Team B is asleep” and correctly identified that an All-Hands meeting involving all groups was impossible under the constraints, offering the best compromise.

Gemini 3 Pro tried to please me. It Hallucinated a valid time slot. It suggested a time that was 3 AM for the Tokyo team, violating the “standard working hours” implicit constraint. It gave a faster answer, but a wrong one.

The Winner: GPT-5.2

For tasks requiring strict adherence to logic, constraints, and advanced reasoning benchmarks, OpenAI remains the “System 2” champion.

Pricing & API: The ROI Calculation

For enterprise users, the “GPT-5.2 vs Gemini 3 Pro” debate often dies in the finance department. The cost structures in late 2025 are radically different.

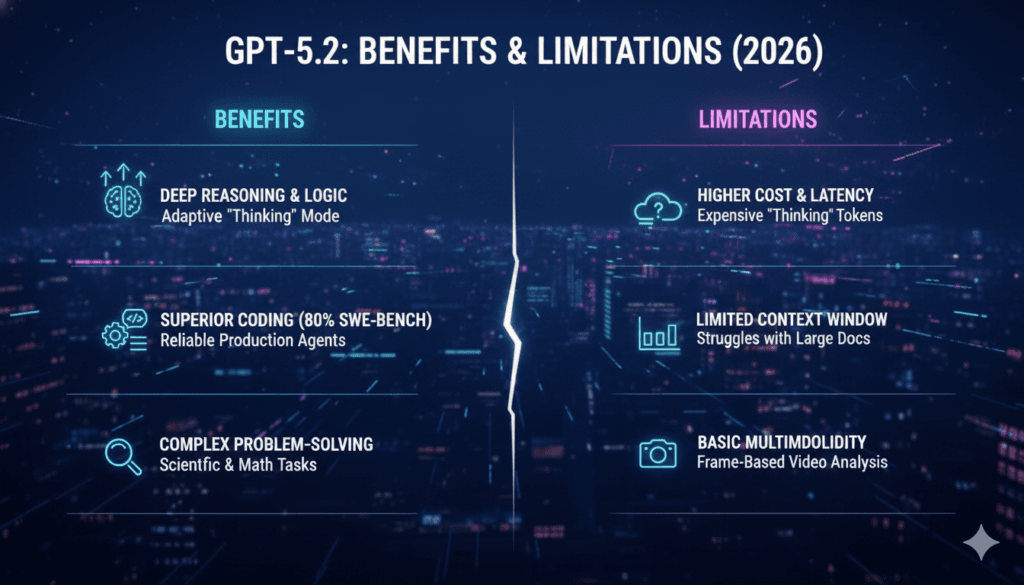

GPT-5.2: The Premium Option

- Input Cost: ~$15.00 / 1M tokens

- Output Cost: ~$60.00 / 1M tokens

- The “Thinking” Tax: Remember, when GPT-5.2 “thinks,” it generates hidden tokens that you pay for. A simple question might generate 500 hidden thought tokens before producing 50 visible tokens. This makes it expensive for simple tasks.

Gemini 3 Pro: The Volume King

- Input Cost: ~$5.00 / 1M tokens

- Output Cost: ~$15.00 / 1M tokens

- Free Tier: Google is still aggressive with its free tier in AI Studio for developers.

The “Hidden” Cost: Latency

We also need to talk about speed.

- GPT-5.2 is slow. That “Thinking” pause can last 10-30 seconds. It is not suitable for real-time customer support chatbots.

- Gemini 3 Pro is snappy. It is optimized for low-latency responses, making it the better choice for interactive apps.

ROI Verdict: Use Gemini for processing data (reading logs, summarizing emails). Use GPT-5.2 for generating value (writing code, strategic planning). The high cost of GPT-5.2 is justified if it saves your engineer 5 hours of debugging.

The Final Verdict: Which Subscription to Keep?

We are living in a golden age of AI, but your wallet doesn’t need to support both ecosystems. After 100+ hours of testing, here is my definitive advice for 2026.

Choose GPT-5.2 If:

- You are a Builder: You are a Software Engineer, Data Scientist, or DevOps professional.

- Precision is Non-Negotiable: You need the AI to follow complex instructions without “vibing” its way off track.

- You rely on “Agentic” workflows: You want to give the AI a goal (e.g., “Fix this repo”) and walk away for coffee.

Choose Gemini 3 Pro If:

- You are a Synthesizer: You are a Content Creator, Market Researcher, or Writer.

- Context is King: You need to load 50 PDFs and a video into the prompt and ask questions about all of them simultaneously.

- You are Cost-Conscious: You are building an app that processes high volumes of text and need a cheaper, faster API.

My Personal Setup? I have canceled my other subscriptions, but I am keeping both—for now. I use Gemini 3 Pro to read and research, gathering the dots. Then, I feed those dots into GPT-5.2 to connect them and build the final product.

The “Battle of AI Titans” isn’t a zero-sum game; it’s about using the right tool for the job. But if I were forced to pick just one for a desert island (with a GitHub connection)? I’d take GPT-5.2. In 2026, the ability to think is the only benchmark that truly matters.

FAQs

Is GPT-5.2 better than Gemini 3 for coding?

Yes. In almost every benchmark, including SWE-bench Verified, GPT-5.2 outperforms Gemini 3 Pro. Its “Thinking” mode allows it to plan architecture and debug logic errors before writing the final code, resulting in higher reliability for complex engineering tasks.

Does Gemini 3 Pro have a thinking mode?

Google has introduced “Deep Think” capabilities in Gemini 3, but it is less transparent and less controllable than OpenAI’s implementation. Gemini tends to prioritize speed and fluidity, whereas GPT-5.2’s thinking mode is a dedicated “System 2” process designed for heavy logic.

What is the max context window for GPT-5.2?

As of late 2025, GPT-5.2 Standard supports a 400k token context window. While significantly larger than GPT-4, it still pales in comparison to Gemini 3 Pro’s 2 Million token capacity.

Can Gemini 3 Pro watch YouTube videos?

Yes, and this is a key differentiator. Gemini 3 Pro has native multimodal capabilities, meaning it processes video frames and audio natively. GPT-5.2 still relies on taking screenshots of video frames, which loses temporal context and audio nuances

References

- For Coding & Agent Reliability (SWE-bench) – The Official SWE-bench Verified Leaderboard

- For Logic & Reasoning Scores (ARC-AGI) – The ARC Prize Official Leaderboard

- For Infinite Context & Multimodality (Gemini) – Google DeepMind Gemini Technical Report (Long Context Understanding)