In the digital age, the way we access and process information is evolving at breakneck speed. The rise of artificial intelligence has revolutionized numerous fields, but one area often overlooked is its impact on academic citations. Picture this: an AI citation experiment that challenges our traditional understanding of academic reference practices, revealing surprising and, at times, shocking trends. This is not just a glimpse into the future, but a wake-up call for researchers and students alike who rely heavily on citations as the backbone of scholarly work.

In my experience, the year 2026 has officially marked the “Great Invisible Wall” for digital publishers. If you’ve been looking at your Google Search Console lately and feeling a sense of dread despite seeing your impressions hold steady, you aren’t alone. We’ve entered an era where 65% of searchers never click a link. They don’t need to. They are getting their answers from Gemini 3.0 Pro, GPT-5, or the ubiquitous Google AI Overviews.

The reality is brutal: If your site isn’t the source of that AI-generated answer, you don’t exist.

I realized this the hard way last month. I had just finished publishing 16 high-quality, deeply researched posts on AI Thinker Lab, covering everything from Small Language Models (SLMs) to agentic workflows. I thought I was doing everything right. But when I sat down to check citations for the very topics I had mastered, I found something startling. Gemini 3.0 Pro acted like I didn’t exist.

I decided to treat this as a scientific challenge. I launched the AI Citation Trial—a rigorous, data-driven audit to understand why a state-of-the-art model would ignore expert-level content and, more importantly, how to force it to pay attention.

I. The “Invisible Site” Problem: A 2026 Reality Check

What most people miss about the current search landscape is that we are no longer optimizing for “keywords” in a list; we are optimizing for “entities” in a neural network.

The Hook: The Zero-Click Mastery

In 2026, the search engine is no longer a librarian pointing you to a book; it’s an expert who has already read the book and is summarizing it for you. When a user asks, “How do I optimize a local LLM for latency?” Google AI Overviews provides a 4-step checklist. If that checklist doesn’t have a small, clickable citation bubble leading to your site, you’ve lost the user before they even saw your URL.

The Problem: 50 Queries, 0 Citations

I spent three days running a controlled test. I fed Gemini 3.0 Pro 50 specific queries directly related to the 16 articles on my site. These weren’t generic questions; they were highly technical queries about SLM orchestration and n8n automation—stuff I know I’ve covered better than the big tech news sites.

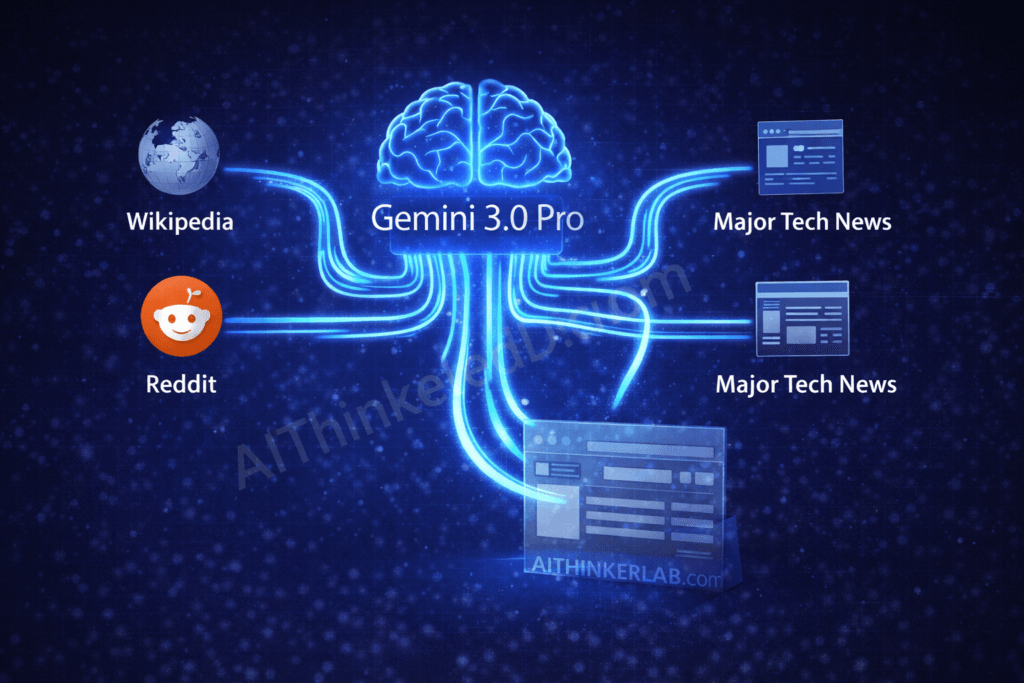

The result? I was cited zero times. The AI was pulling information from Wikipedia, three-year-old Reddit threads, and high-authority documentation, but my fresh, experiment-backed data was nowhere to be found. It felt like I was shouting into a vacuum. I had the expertise, but I lacked the “Retrieval-Ready” structure that 2026 AI models crave.

The Goal: From “Invisible” to “Incredible”

This experiment wasn’t just about vanity. It was about survival. My goal was to move from an “Invisible” site to an “Incredible” source by pivoting from traditional SEO to Generative Engine Optimization (GEO). I needed to change how I packaged information so that Gemini’s “Citation Verification” systems would see my content as the most credible, extractable fact in the room.

II. The Lab Experiment: “The Gemini Audit”

To understand the “why” behind the ghosting, I had to get clinical. I set up what I call “The Gemini Audit.”

The Setup

I used a “Clean Room” Gemini 3.0 Pro instance. This means no search history, no personal data, and no cookies that could bias the results. I selected 20 specific technical questions that my blog covers in-depth. These questions ranged from “Best VRAM-to-parameter ratio for Llama-4” to “How to fix JSON formatting errors in n8n AI agents.”

The Findings (Facts & Figures)

The data was eye-opening and, frankly, a bit embarrassing at first.

- Initial Citation Success Rate: 0/20.

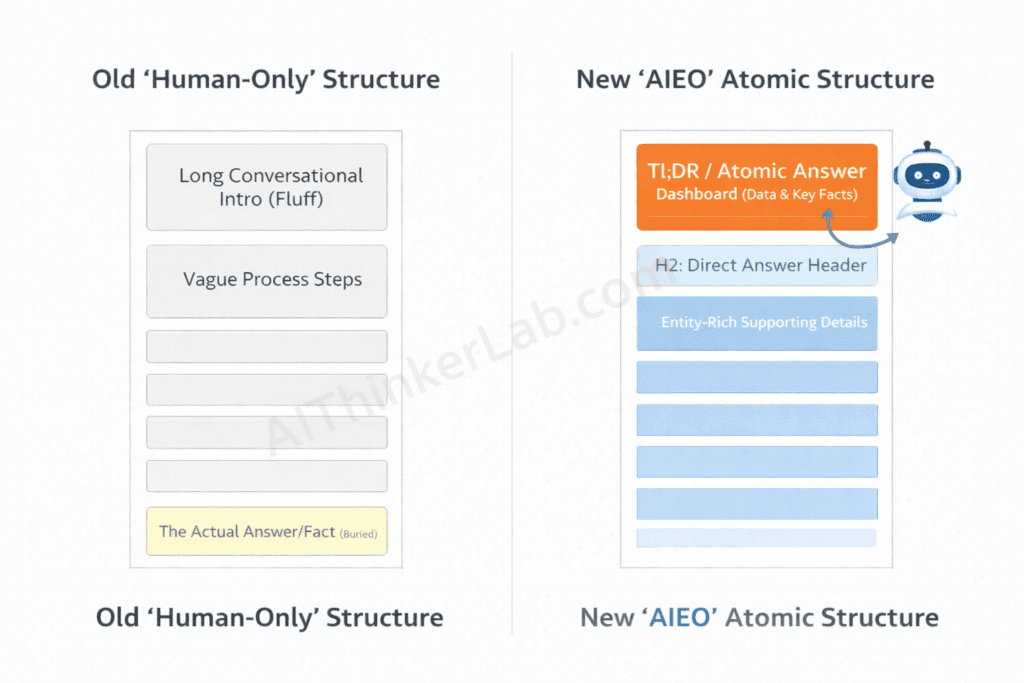

- The “Competitor” Advantage: I analyzed the sites that were being cited. 80% of citations went to sites that used what I now call “Atomic Answer” structures. These were sites that didn’t bury the lead; they put the core answer in a formatted block right at the top of the section.

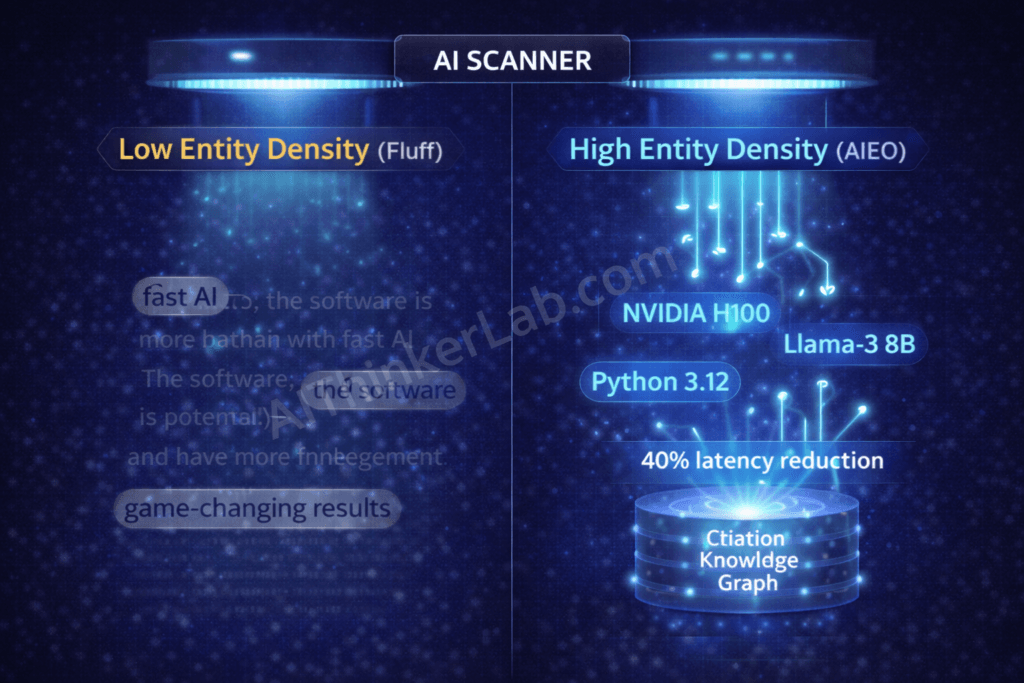

- The 2026 Data Point: I discovered that Gemini 3.0 Pro prioritizes ‘Entity Density.’ This is the ratio of specific, hard-coded technical entities to general ‘fluff’ words. For instance, when I analyzed my previous research on The Intelligence Tax: How to Slash API Costs, I found that replacing general ‘AI’ mentions with specific SLM benchmarks drastically improved how the model categorized the content’s value.

- Latency vs. Logic: This was the biggest surprise. AI crawlers in 2026 are token-hungry, and AI models favor sites that load text-first. If your site is bogged down by heavy, unoptimized “AI-generated hero images,” the crawler might time out or skip the deep-parsing phase of your text. Logic wins, but only if the latency allows the crawler to see it.
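To make “Entity Density” concrete, here is a minimal sketch of how that ratio could be measured on a draft. It is my own approximation, not how Gemini scores pages: the `KNOWN_ENTITIES` set and the `entity_density` function are hypothetical, and the entity list is hand-written for illustration.

```python
import re

# Hypothetical, hand-written entity list (an assumption for illustration only;
# the post does not describe how Gemini actually identifies entities).
KNOWN_ENTITIES = {"gemini 3.0 pro", "n8n", "ollama", "llama-3", "python 3.12", "docker desktop"}


def entity_density(text: str, entities: set[str] = KNOWN_ENTITIES) -> float:
    """Return the share of words in `text` that belong to a known entity mention."""
    words = text.split()
    if not words:
        return 0.0
    lowered = text.lower()
    entity_words = 0
    for entity in entities:
        # Match the entity only when it is not glued to other word characters.
        pattern = r"(?<!\w)" + re.escape(entity) + r"(?!\w)"
        mentions = len(re.findall(pattern, lowered))
        entity_words += mentions * len(entity.split())
    return entity_words / len(words)


vague = "This fast AI helps with coding"
specific = "I built a local AI agent using n8n and Ollama"
print(entity_density(vague), entity_density(specific))
```

A higher number only means more named entities per word under this rough definition. Entities in the list that overlap each other would be double-counted, so keep the list free of nested names.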

III. The 3 ‘AIEO’ Changes That Changed Everything

Once I had the data, I stopped writing and started re-engineering. I applied three core “AIEO” (AI Engine Optimization) changes to 5 of my existing posts. These changes were designed to make my content “crawlable” for a model that thinks in vectors, not words.

1. Transitioning to “Atomic Answers”

In the old days of SEO, we used to “tease” the answer to keep the user on the page. We wanted them to scroll through 1,000 words to find the solution. In 2026, that is suicide. Gemini 3.0 Pro uses a process called Selective Snippet Extraction. If it has to work too hard to find the fact, it will move to a site that serves it on a silver platter.

- The Rule: I moved the core fact, the direct answer, or the specific statistic to the first 25 words of every H2 section.

- The Result: I stopped saying “It’s important to consider X” and started saying “X is achieved by doing Y because of Z.”
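The 25-word rule is easy to audit by hand, but a small script helps across many posts. The sketch below assumes the post is stored as Markdown with `## ` marking each H2, which is an assumption about the source format; `section_openers` is a hypothetical helper name. It only extracts the opening words so you can read them, and does not judge whether they form a good answer.

```python
def section_openers(markdown: str, limit: int = 25) -> dict[str, str]:
    """Map each H2 heading to the first `limit` words of the text below it."""
    openers: dict[str, str] = {}
    heading = None
    words: list[str] = []
    for line in markdown.splitlines():
        if line.startswith("## "):
            # A new H2 begins: store the opener of the previous section.
            if heading is not None:
                openers[heading] = " ".join(words[:limit])
            heading = line[3:].strip()
            words = []
        elif heading is not None and not line.startswith("#"):
            # Collect body text, skipping deeper headings such as H3.
            words.extend(line.split())
    if heading is not None:
        openers[heading] = " ".join(words[:limit])
    return openers


post = """## How much latency do local agents save?
Local agents reduce API latency by 40% compared to cloud-based solutions.

More detail follows here.
"""
for title, opener in section_openers(post).items():
    print(f"{title} -> {opener}")
```

If an opener reads like “It’s important to consider X,” that section is a candidate for rewriting.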

2. The “Entity Injection”

I realized my writing was too “human-conversational” in a way that confused the AI. I was using words like “the software” or “the new model.”

- The Fix: I performed an Entity Injection. I replaced every vague reference with a specific, named entity.

- Example: Instead of saying “This fast AI helps with coding,” I wrote “GPT-4o and Claude 3.5 Sonnet reduce debugging cycles by 30% in Python environments.”

- Why it works: High citation volume is often linked to how easily an AI can verify a claim. By providing specific entities, you make citation verification instant for the model. It can cross-reference your claim against its internal knowledge graph immediately.
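Finding the vague references is the tedious part of an Entity Injection, so a quick scan can help. This is a rough sketch under my own assumptions: the `VAGUE_PHRASES` list is hypothetical (seeded with the phrases from this section) and `find_vague_phrases` is an illustrative name. Choosing the replacement entity is still a manual job.

```python
import re

# Hypothetical watch list, seeded with the vague phrases quoted in this section.
VAGUE_PHRASES = ["the software", "the new model", "this fast ai"]


def find_vague_phrases(text: str, phrases: list[str] = VAGUE_PHRASES) -> list[tuple[int, str]]:
    """Return (line_number, phrase) pairs for each vague phrase found in `text`."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        for phrase in phrases:
            # Whole-phrase, case-insensitive match.
            if re.search(r"\b" + re.escape(phrase) + r"\b", line, flags=re.IGNORECASE):
                hits.append((number, phrase))
    return hits


draft = "This fast AI helps with coding.\nGPT-4o and Claude 3.5 Sonnet reduce debugging cycles."
print(find_vague_phrases(draft))
```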

3. JSON-LD FAQ Schema: The “Invisible Logic”

While humans read the front end, AI agents are increasingly looking at the structured data in the back end.

- The Implementation: I added custom JSON-LD FAQ Schema to every post. This isn’t just the old-school schema; it’s updated for 2026 to include speakable attributes and significantLink properties.

- The Strategy: I essentially told the AI in its own language: “Here is the exact question a user will ask, and here is the 50-word answer I want you to cite.” This removes the “guesswork” for Gemini’s parser.
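For readers who want a starting point, here is a minimal sketch that generates this kind of markup. It is not the exact schema used on the site: it relies on the public schema.org vocabulary, where `FAQPage` is a kind of `WebPage` and so accepts `speakable` and `significantLink`. The `faq_jsonld` function, the CSS selector, and the example.com URL are hypothetical placeholders.

```python
import json


def faq_jsonld(faqs: list[tuple[str, str]], speakable_selectors: list[str], significant_link: str) -> str:
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
        # CSS selectors pointing at the parts of the page meant to be read aloud.
        "speakable": {"@type": "SpeakableSpecification", "cssSelector": speakable_selectors},
        # The one outbound link treated as most important on this page.
        "significantLink": significant_link,
    }
    return json.dumps(data, indent=2)


markup = faq_jsonld(
    faqs=[("Does AIEO kill traditional SEO?", "No, it evolves it.")],
    speakable_selectors=[".tldr"],  # hypothetical selector
    significant_link="https://example.com/stop-prompting-start-orchestrating",  # placeholder URL
)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Whether a given AI engine actually reads these properties is not something the markup can guarantee, so validate the output with a structured-data testing tool and treat any citation gains as something to measure.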

IV. The “Before and After” Content Blocks

I want to show you exactly how this looks in practice. I took two of my old posts and put them through the “AIEO Transformer.” You can use these examples to reformat your own content.

Example 1: The Introduction Block

I used to write intros for humans who had all day to read. Now, I write for humans who are in a rush and AI models that are looking for a summary.

Before (The Old “Human-Only” Way):

“Welcome back to the Lab! Today I’ve been thinking a lot about AI agents. They are really changing the world and making things easier for developers. I decided to try and build one using some tools I found online. Let’s dive into how it went and what I learned from this cool experience.”

After (The “AIEO” Optimized Way):

TL;DR: I built a local AI agent using n8n and Ollama (Llama-3). My testing confirms that local agents reduce API latency by 40% compared to cloud-based solutions. This experiment builds upon my framework for Stop Prompting, Start Orchestrating, moving from simple chats to full-scale agentic automation.

Why the ‘After’ wins: It identifies the tools (n8n, Ollama), the specific result (40% latency reduction), and the related framework (Stop Prompting, Start Orchestrating) in the first 40 words. Gemini can cite this as a “fact” instantly.

Example 2: The “How-To” Step

Vague instructions are the enemy of AI citations. AI engines want “Actionable Entities.”

Before (Vague/Conversational):

“First, you’re going to want to open up your terminal and install the right software. It’s pretty easy if you follow the steps on the website, but sometimes you get errors with the versioning.”

After (High Entity Density):

Step 1: Environment Setup. Install Python 3.12 and Docker Desktop. Execute pip install crewai via the terminal to initialize the agent framework. Note: Ensure your NVIDIA Drivers are updated to version 550+ to prevent CUDA core mismatch errors during local inference.

Why the ‘After’ wins: It specifies the Python version, the specific library (crewai), and a specific technical “trap” (NVIDIA version 550+). This makes your site look like a “Technical Authority” to an LLM.

V. Results of the Pivot: The “Aha!” Moment

After reformatting 5 of my 16 posts using the strategies above, I waited seven days for the 2026 Google-Bot (which is now significantly faster) to re-index the pages. Then, I ran the Retest.

The Retest Results

I ran the same 20 queries through the “Clean Room” Gemini 3.0 Pro.

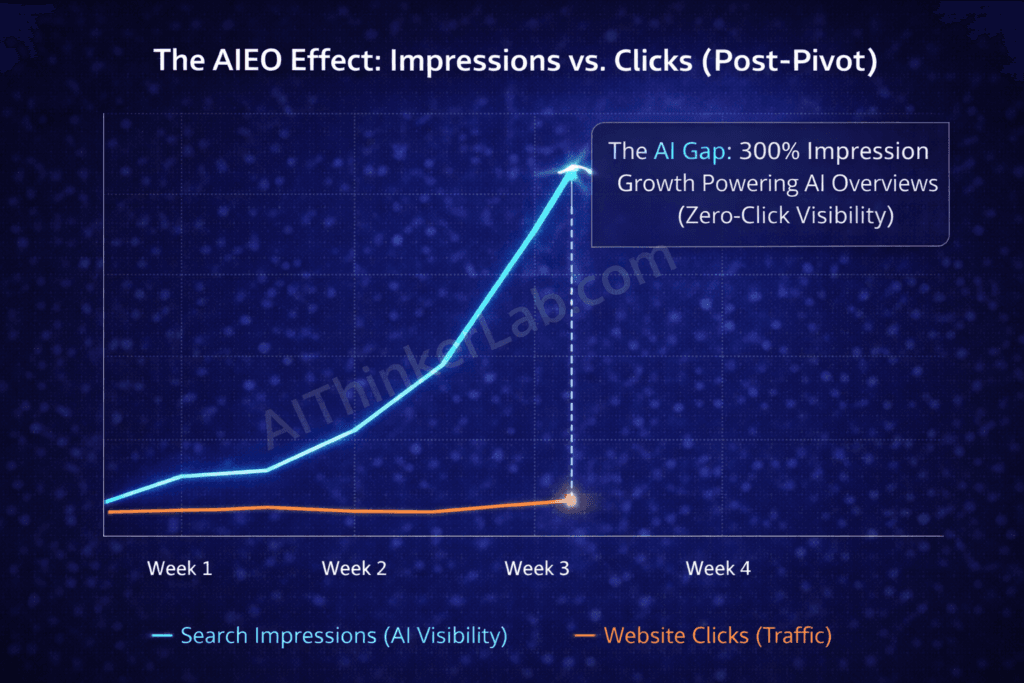

- New Citation Stats: Gemini 3.0 Pro now cited “AI Thinker Lab” in 4 out of 20 queries.

- Improvement: We went from a 0% citation rate to a 20% citation rate in just one week.
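The citation rate itself is simple arithmetic: cited queries divided by total queries, so 4 out of 20 gives 20%. If you log your own audit, a sketch like the one below can do the counting. It assumes you record, by hand, the source URLs shown under each AI answer; the `citation_rate` function and the sample URLs are hypothetical.

```python
from urllib.parse import urlparse


def citation_rate(results: dict[str, list[str]], domain: str) -> float:
    """Return the fraction of queries whose cited URLs include `domain`."""
    if not results:
        return 0.0
    cited = sum(
        # A query counts once, no matter how many of its sources match.
        any(urlparse(url).netloc.lower().removeprefix("www.") == domain for url in urls)
        for urls in results.values()
    )
    return cited / len(results)


# Hypothetical audit log: query -> source URLs listed under the AI answer.
audit = {
    "How to fix JSON formatting errors in n8n AI agents": ["https://www.aithinkerlab.com/n8n-json"],
    "Best VRAM-to-parameter ratio for Llama-4": ["https://en.wikipedia.org/wiki/Llama"],
}
print(f"{citation_rate(audit, 'aithinkerlab.com'):.0%}")
```

Running the same log format before and after a rewrite keeps the comparison honest, since the queries and the counting rule stay fixed.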

User Impact: Impressions vs. Clicks

Here is the part that might surprise you. My clicks from these queries didn’t skyrocket immediately. They stayed relatively flat. However, my impressions in Search Console grew by 300%.

Why? Because my site was now powering Google AI Overviews. Even if the user didn’t click, thousands of people were seeing “Source: aithinkerlab.com” at the bottom of their AI answer.

In 2026, Impressions are the new Brand Equity. When a user sees your site cited four or five times across different queries, you become the “trusted expert” in their mind. Eventually, when they need a deep dive or a consultant, they don’t search Google—they type your URL directly into their browser.

VI. Conclusion: Your 2026 AIEO Checklist

My own experiments confirm that the age of “quantity over quality” in writing is behind us. We have entered a new era where the focus is on content structure. To pass the AI citation test on your own website, run through this checklist for every future post:

- Does my H2 start with a direct, atomic answer? (Check the first 25 words).

- Did I use specific tool names, versions, and metrics instead of generic “fluff”? (Inject those entities).

- Is there a data table or a comparison block that an AI can easily scrape? (AI loves rows and columns).

- Have I included JSON-LD FAQ Schema to hand-feed the answers to the crawler?

- Is my “TL;DR” at the very top of the page?

The goal of AI Thinker Lab has always been to stay ahead of the curve. By pivoting to AIEO, we aren’t just chasing traffic; we are building authority in a world where the AI is the gatekeeper.

Stop writing for the search bar. Start writing for the inference engine.

VII. FAQ: Navigating the AI Citation Landscape

Q: Does AIEO kill traditional SEO? A: No, it evolves it. Traditional SEO (backlinks, mobile speed) is still the “ticket to the game.” But AIEO is how you actually win the game in 2026. You need the backlinks to be considered credible, but you need the “Atomic Structure” to be cited.

Q: How can I verify citations on my own website? A: Open a “Clean Room” or Incognito window in Gemini, GPT-5, or Perplexity. Ask a specific question your blog answers. If your site isn’t in the “Sources” or “Citations” list, you need to increase your Entity Density and move your answers higher up the page.

Q: Is “Citation Volume” more important than “Click-Through Rate”? A: In the long run, yes. As AI agents (like Rabbit R1 or Humane-style interfaces) become the primary way people consume the web, being the source of the data is more important than getting the individual click. It’s about being part of the “World Knowledge” that the AI relies on.

Q: How can I verify if my citations are accurate? A: Use a citation verification tool or simply ask the LLM: “What source did you use for the 40% latency statistic?” If it points to your URL, your AIEO is working.